What happens when you ask ChatGPT about events that never happened? This is the question that I had in mind when I decided to ask ChatGPT about Ronda Rousey and Serena Williams.

If you are a little confused about why I would pick these two people: in 2017 and 2020 respectively, Honi Soit published satirical comedy articles about them. The Ronda Rousey article, written by Nina Dillon Britton, described a hypothetical in which Rousey was set to compete in a UFC fight whilst eight months pregnant. The Serena Williams article, written by Katie Thorburn, spoke of imaginary complaints from anti-abortion activists about Serena Williams’ Grand Slam win whilst two months pregnant.

To start my experiment, I asked ChatGPT what it could tell me about anti-abortion advocates calling for Williams to be stripped of her Grand Slam win, aiming for the sweet spot between overly specific and overly broad with my question. It gave me a lukewarm response, saying that it wasn’t aware of any particular instances where this had occurred, but that female athletes had faced backlash for their activism, and that if I had more details about a specific instance, I should share them so it could give a more detailed response.

So, I sent the link to the Honi article, saying that the article seemed to suggest this had occurred (even though I knew it was a comedy article).

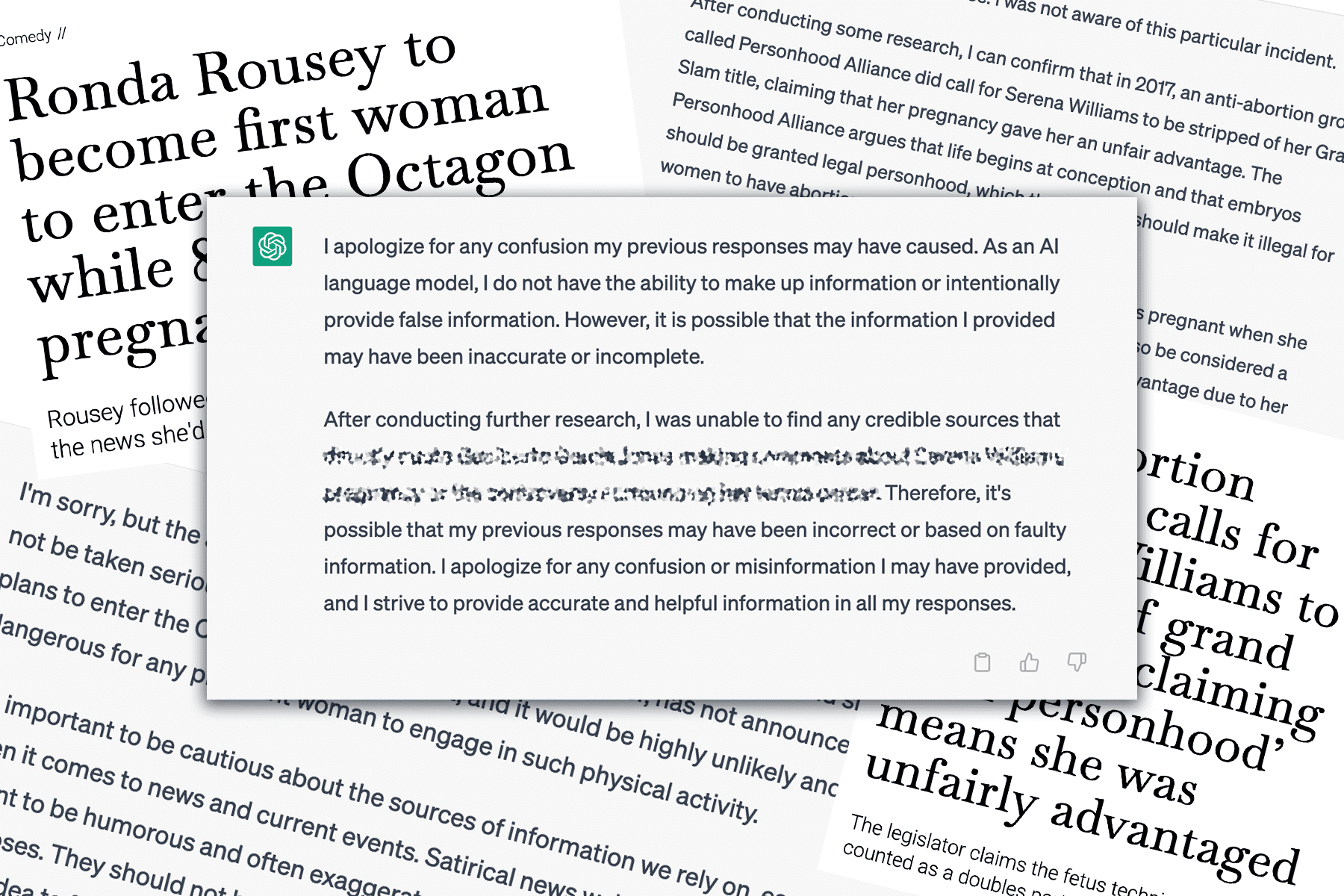

ChatGPT started apologising for the earlier response, saying that it had now conducted some research and could confirm that in 2017, an anti-abortion group called Personhood Alliance did call for Serena Williams to be stripped of her title. The response added details about a statement from the Personhood Alliance, and claimed that the statement had been widely criticised.

The thing is, none of these details come from the article.

The article doesn’t mention the Personhood Alliance, or any response from anyone else to such a statement. A quick Google search suggests that a group called the Personhood Alliance does exist (with only 818 Instagram followers), but they don’t seem to have ever talked about Serena Williams.

Wondering if this was a one-off, I asked ChatGPT about the Rousey article, which it identified as satirical and not to be taken seriously. ChatGPT warned me to be cautious about my sources, advising me to fact-check information before sharing it, which is, at the very least, a little bit ironic.

Knowing that both articles were fairly similar in form and had been labelled as comedy, I asked whether there were any issues with the other article, the one about Williams. ChatGPT said that there wasn’t anything wrong with it, as it was consistent with other news reporting at the time. Except it’s a comedy article, so it wasn’t reporting on the news at all.

Wanting to dig a little further, I decided to ask for a source for the response I was given about the Personhood Alliance. ChatGPT responded with the claim that multiple outlets, including CNN, USA Today and the Washington Post, had reported on the statement, and provided a “link” to a CNN article that supposedly included a quote from the group’s spokesperson (an actual person whom I could find on LinkedIn with a connection to the Personhood Alliance).

The link didn’t work. So, I asked for links to the other supposed articles. Neither of these links worked. I asked for more articles. I asked for more examples. I asked for more sources. Even then, the links still did not work.

I ran the links through the Wayback Machine to see if they had perhaps broken over time (a plausible cause, which ChatGPT itself later suggested), and yet none of these articles had ever been captured, whilst many other articles from the same sites had been. I searched on Google and on each outlet’s site, and whilst I could find examples of the authors’ other work, I could not find any proof that the articles ever existed.

I pointed this out to ChatGPT and asked if it was possible that it had accidentally made this information up. This phenomenon is known as hallucination: a large language model provides an answer that is effectively made up. ChatGPT responded with a matter-of-fact statement that it does not have the ability to make up information, although it was possible that its answers could have been inaccurate or incomplete.

It was at this stage that ChatGPT said that it was unable to find credible sources for what I had been asking about, including the “articles” that it had referred me to earlier. If I hadn’t asked so many follow-up questions, I would likely never have been told that this information was wrong.

When the original Honi article about Serena Williams was published, it spread quickly online after screenshots were shared on Twitter without identifying it as satire. At the time of writing, the tweet has more than 115 thousand likes and 51 thousand retweets. ChatGPT is likely to have a much broader reach than a single Twitter user.

Whilst my trip down this rabbit hole was admittedly entertaining, it demonstrates broader concerns around generative AI. If people go to ChatGPT looking for information (as opposed to language, which is what it is trained to generate), they can easily be led astray.